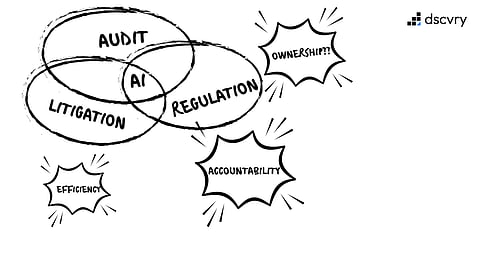

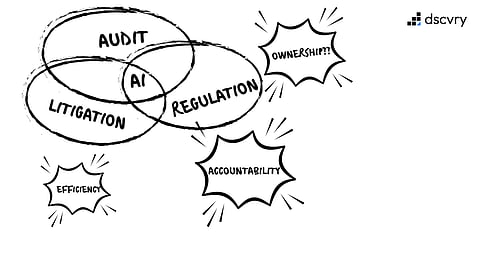

At a recent roundtable with legal, risk, and audit leaders from India's top enterprises, a consistent concern emerged: AI adoption is accelerating faster than accountability frameworks are maturing.

AI systems are now embedded in credit approvals, contract review, compliance monitoring, and supplier evaluation. These systems influence outcomes that carry legal and financial consequences. Yet when such systems operate in production, responsibility for individual decisions often diffuses across technology teams, vendors, and business functions.

For regulators, auditors, and courts, this is unacceptable. Accountability must remain attributable.

The central question is no longer whether AI improves efficiency. It is whether its decisions can be explained, evidenced, and defended under scrutiny.

For Indian companies operating globally, the rules for AI governance are messy. It is like trying to drive a car through three different countries, each with different traffic laws, all at the same time.

India: The Digital Personal Data Protection Act, 2023 (DPDP Act) centres on the protection of personal data. To comply, organizations must establish robust data provenance frameworks to prove lawful consent was obtained for data used in AI models.

Europe: The EU AI Act emphasizes safety through risk categorization. Systems classified as "high-risk" require mandatory human oversight, algorithmic transparency, conformity assessment, and post-market monitoring obligations.

The U.S.: The focus is on liability exposure. AI outputs, logs, and model documentation can become evidentiary records.

A system compliant in one jurisdiction may fail requirements in another. Governance must therefore be designed to meet the strictest requirement that applies across jurisdictions, not the minimum local standard.

This complexity isn’t just about compliance; it’s also about protecting the business, the board, and the brand.

Many organizations implement Human-in-the-Loop (HITL) protocols as a safeguard against this, where a person checks the AI’s decision before it goes out. However, HITL often fails in practice due to automation bias, a well-documented psychological phenomenon where humans over-rely on automated systems and cease meaningful monitoring.

The review becomes passive when a human reviewer, incentivized by speed and fatigued by volume, simply clicks "Approve" on AI-generated outputs.

Regulators increasingly recognize this as "rubber-stamping." If reviewers cannot articulate the rationale behind an AI decision, lack the authority to override outputs, or cannot demonstrate genuine deliberation, human oversight does not, by itself, satisfy regulatory expectations.

This is why leading enterprises are redesigning decision flows so that AI informs rather than determines outcomes. Firms are now working with partners to design systems where AI is in the loop and a human still owns the decision. Instead of an algorithm making decisions that a human merely validates, AI highlights risks, surfaces insights, or flags inconsistencies, and a trained professional makes the call.

This puts the responsibility and the judgment back in human hands, forcing the operator to engage cognitively with the data rather than rubber-stamping a machine's conclusion.

Crucially, it also produces defensible artifacts: human rationale, contextual notes, and audit trails.
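As a rough illustration only, the "AI informs, a human decides" pattern and the artifacts it leaves behind could be sketched as below. Every name here (`AiFinding`, `HumanDecision`, `record_decision`) and the minimum-rationale rule are hypothetical assumptions made for the example; they do not describe any particular product or regulatory requirement.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AiFinding:
    """What the AI surfaced: advisory input only, never the outcome itself."""
    case_id: str
    model_version: str
    flagged_risks: tuple[str, ...]
    suggested_outcome: str


@dataclass(frozen=True)
class HumanDecision:
    """The artifact kept for audit: who decided, what they decided, and why."""
    case_id: str
    reviewer: str
    outcome: str
    rationale: str
    overrode_ai: bool
    model_version: str
    decided_at: str


def record_decision(
    finding: AiFinding,
    reviewer: str,
    outcome: str,
    rationale: str,
    min_rationale_words: int = 5,  # assumed threshold, purely illustrative
) -> HumanDecision:
    """Create a decision record; refuse one-click approvals with no reasoning."""
    if len(rationale.split()) < min_rationale_words:
        raise ValueError("A written rationale is required before a decision is recorded")
    return HumanDecision(
        case_id=finding.case_id,
        reviewer=reviewer,
        outcome=outcome,
        rationale=rationale,
        # Track disagreement with the AI so override rates can be reviewed later.
        overrode_ai=(outcome != finding.suggested_outcome),
        model_version=finding.model_version,
        decided_at=datetime.now(timezone.utc).isoformat(),
    )
```

In this sketch the reviewer cannot complete a decision without writing a rationale, and each record notes whether the human departed from the AI's suggestion. A word count is of course only a crude proxy for genuine deliberation; the point is that the decision, its owner, and its reasoning are captured together.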

This shift puts General Counsel (GCs) and Compliance and Risk Officers in a new seat. One change we are seeing is leadership getting involved early. Their role is to define the "Risk Threshold", especially in scenarios where deliberate decision-making outweighs the efficiency gains of full automation. The key requirements are:

Data lineage: Traceable sources, lawful basis for processing, and documented consent where required.

Model provenance: Version control for models, prompts, and configurations so outputs can be reproduced.

Decision traceability: Ability to reconstruct which inputs, model versions, and parameters produced a specific result.

Immutable logging: Tamper-resistant records suitable for regulatory or judicial review.

Structured documentation: Model Cards, impact assessments, and risk analyses that translate technical behaviour into legal terms.

These practices convert AI from a “black box” into an auditable system of record.
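To make decision traceability and tamper-resistant logging concrete, here is a minimal sketch of a hash-chained decision log. It is an assumption-laden illustration, not a reference design: the `DecisionLog` class and the record fields (`decision_id`, `model_version`, and so on) are hypothetical, and a hash chain only makes tampering detectable. A production system would likely also need write-once storage or an externally anchored copy.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the record together with the previous entry's hash."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class DecisionLog:
    """Append-only log in which each entry commits to the one before it."""

    def __init__(self) -> None:
        self._entries: list[dict] = []

    def append(self, record: dict) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS_HASH
        # Round-trip through JSON so later changes to the caller's dict
        # cannot silently alter what was logged.
        stored = json.loads(json.dumps(record))
        entry = {
            "prev": prev_hash,
            "record": stored,
            "hash": _entry_hash(prev_hash, stored),
        }
        self._entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute the chain; an edited or reordered entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self._entries:
            expected = _entry_hash(prev_hash, entry["record"])
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True

    def find(self, decision_id: str) -> list[dict]:
        """Return every logged record for one decision, oldest first."""
        return [
            e["record"]
            for e in self._entries
            if e["record"].get("decision_id") == decision_id
        ]
```

A record appended to such a log might carry the decision identifier, a reference to the input data and its consent basis, the model and prompt versions, the parameters used, and the reviewer's name. With those fields in place, `find` can reconstruct what produced a given result, and `verify` indicates whether the history has been altered since it was written.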

This proactive governance isn’t just about risk avoidance. It’s also about building institutional trust.

AI governance is no longer a purely technical matter. It sits at the intersection of legal liability, enterprise risk, and fiduciary duty, and it is increasingly demanding board attention.

Under evolving professional standards, including ABA Model Rule 1.1 in the United States (whose Comment 8 extends the duty of competence to the benefits and risks of relevant technology) and emerging frameworks in India, legal leaders must maintain sufficient technical literacy to fulfil their professional obligations.

This evolution has prompted specialized workshops for legal, risk, and audit teams. These are not technical training but interpretive frameworks that translate AI risk into actionable governance. The sessions help legal leaders understand AI risk vocabulary, establish governance requirements for technology teams, draft internal "AI risk policies," evaluate vendor systems by demanding artifacts such as algorithmic impact assessments, and probe deeper into explainability, oversight, and defensibility.

The roundtable discussions highlighted a critical accountability gap: business leaders often view AI as an IT initiative, while IT leaders view it as a business enabler, leaving the risk unowned.

Legal/Risk teams, who historically acted as advisors, are now expected to be "gatekeepers", certifying that probabilistic, non-deterministic systems meet safety and compliance standards.

They are prepared to assume these responsibilities but require early engagement. They need systems that are operationally safe, explainable under examination, and resilient to challenge. This demands fluency in reviewing Model Cards and Algorithmic Bias Audits with appropriate scrutiny.

This capability does not emerge from generic tools or one-size-fits-all platforms. It requires building AI systems with appropriate governance structures embedded from inception, aligned with emerging standards such as ISO/IEC 42001 for AI Management Systems.

The consensus was clear: Business Unit Heads must be accountable for AI outputs, just as they would be for a human employee's output, while Legal/Risk holds governance and approval authority over high-risk deployments.

Enterprise AI systems will increasingly be examined by regulators, auditors, and courts. Efficiency gains alone are insufficient. Organizations must demonstrate that automated processes are explainable, reproducible, documented, and accountable to identifiable decision-makers.

In short, AI must be defensible by design.

This requires forensic clarity of ownership, a redefinition of the "guardian" role, and architectures capable of withstanding litigation.

Interested in empowering your legal, compliance, audit and risk teams? Let’s schedule a brief conversation about our workshops.

To know more, write to connect@dscvryai.com.

DscvryAI is an enterprise AI systems firm founded by operators from Deloitte, Accenture, and HSBC. We build legal intelligence infrastructure that transforms contracts, matters, and precedent into structured, queryable knowledge. Our Axiom platform is trusted by legal teams across India and the UK.